AWS Lambda is a compute service that lets you run code without provisioning or managing servers.

Lambda runs your code on a high-availability compute infrastructure and performs all of the administration of the compute resources, including server and operating system maintenance, capacity provisioning and automatic scaling, and logging. You organize your code into Lambda functions. The Lambda service runs your function only when needed and scales automatically.

Lambda is an ideal compute service for application scenarios that need to scale up rapidly, and scale down to zero when not in demand. For example, you can use Lambda for:

File processing: Use Amazon Simple Storage Service (Amazon S3) to trigger Lambda data processing in real time after an upload.

Stream processing: Use Lambda and Amazon Kinesis to process real-time streaming data for application activity tracking, transaction order processing, clickstream analysis, data cleansing, log filtering, indexing, social media analysis, Internet of Things (IoT) device data telemetry, and metering.

Web applications: Combine Lambda with other AWS services to build powerful web applications that automatically scale up and down and run in a highly available configuration across multiple data centers.

IoT backends: Build serverless backends using Lambda to handle web, mobile, IoT, and third-party API requests.

Mobile backends: Build backends using Lambda and Amazon API Gateway to authenticate and process API requests. Use AWS Amplify to easily integrate with your iOS, Android, Web, and React Native frontends.

Key features of AWS Lambda functions include:

Event-driven computing

Pay-per-use pricing model

Automatic scaling

Support for multiple programming languages

Integration with AWS services

Versioning and aliases

Concurrency control

Security and compliance

Monitoring and logging capabilities

Global availability

Objectives

Here, we will create a Lambda function that resizes each image uploaded to an origin bucket and saves the resized copy to a destination bucket. Let's begin...

Steps

step 1. Make sure your IAM user has permission to work with IAM, S3, and Lambda

step 2. Create two S3 buckets: one for the original images (origin) and one for the resized images (destination)
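S3 bucket names are shared across all AWS accounts and must follow the S3 naming rules. As a minimal sketch, the hypothetical helper `isValidBucketName` checks a subset of those rules (3 to 63 characters; lowercase letters, digits, dots and hyphens; a letter or digit at both ends; no adjacent dots; not shaped like an IPv4 address). It cannot tell you whether a name is already taken; only S3 can.

```javascript
// Hypothetical helper: checks a SUBSET of the S3 general purpose bucket naming rules.
// It does not check global uniqueness; S3 reports that when you create the bucket.
function isValidBucketName(name) {
  // Length must be 3 to 63 characters
  if (name.length < 3 || name.length > 63) return false;
  // Lowercase letters, digits, dots and hyphens only; start and end with a letter or digit
  if (!/^[a-z0-9][a-z0-9.-]*[a-z0-9]$/.test(name)) return false;
  // No two adjacent periods
  if (name.includes('..')) return false;
  // Must not be formatted like an IPv4 address, e.g. 192.168.5.4
  if (/^\d{1,3}(\.\d{1,3}){3}$/.test(name)) return false;
  return true;
}

console.log(isValidBucketName('my-real-bucket-69'));     // true
console.log(isValidBucketName('my-thumbnail-image-69')); // true
console.log(isValidBucketName('My_Bucket'));             // false
```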

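step 3. In IAM, go to Policies, create a new policy (here named resizerPolicy), and replace its JSON with the following. Use your own bucket names: `s3:GetObject` is granted on the origin bucket and `s3:PutObject` on the destination bucket.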

```json
{
  "Version": "2012-10-17",
  "Statement": [
    {
      "Effect": "Allow",
      "Action": [
        "logs:PutLogEvents",
        "logs:CreateLogGroup",
        "logs:CreateLogStream"
      ],
      "Resource": "arn:aws:logs:*:*:*"
    },
    {
      "Effect": "Allow",
      "Action": [
        "s3:GetObject"
      ],
      "Resource": "arn:aws:s3:::my-real-bucket-69/*"
    },
    {
      "Effect": "Allow",
      "Action": [
        "s3:PutObject"
      ],
      "Resource": "arn:aws:s3:::my-thumbnail-image-69/*"
    }
  ]
}
```
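The policy only works if its bucket names match the buckets you actually created. One way to keep them in sync is to generate the document from the two names. This is a minimal sketch; `buildResizerPolicy` is a hypothetical helper, and the bucket names passed to it are the ones used in the policy above.

```javascript
// Hypothetical helper: builds the resizer policy from the origin and destination bucket names.
function buildResizerPolicy(originBucket, destBucket) {
  return {
    Version: '2012-10-17',
    Statement: [
      {
        // Allow the function to write its logs to CloudWatch Logs
        Effect: 'Allow',
        Action: ['logs:PutLogEvents', 'logs:CreateLogGroup', 'logs:CreateLogStream'],
        Resource: 'arn:aws:logs:*:*:*',
      },
      {
        // Read objects from the origin bucket only
        Effect: 'Allow',
        Action: ['s3:GetObject'],
        Resource: `arn:aws:s3:::${originBucket}/*`,
      },
      {
        // Write objects to the destination bucket only
        Effect: 'Allow',
        Action: ['s3:PutObject'],
        Resource: `arn:aws:s3:::${destBucket}/*`,
      },
    ],
  };
}

// Print JSON that can be pasted into the IAM policy editor
console.log(JSON.stringify(buildResizerPolicy('my-real-bucket-69', 'my-thumbnail-image-69'), null, 2));
```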

step 4. Create a role for the Lambda service (here named resizerRole) and attach the policy created above

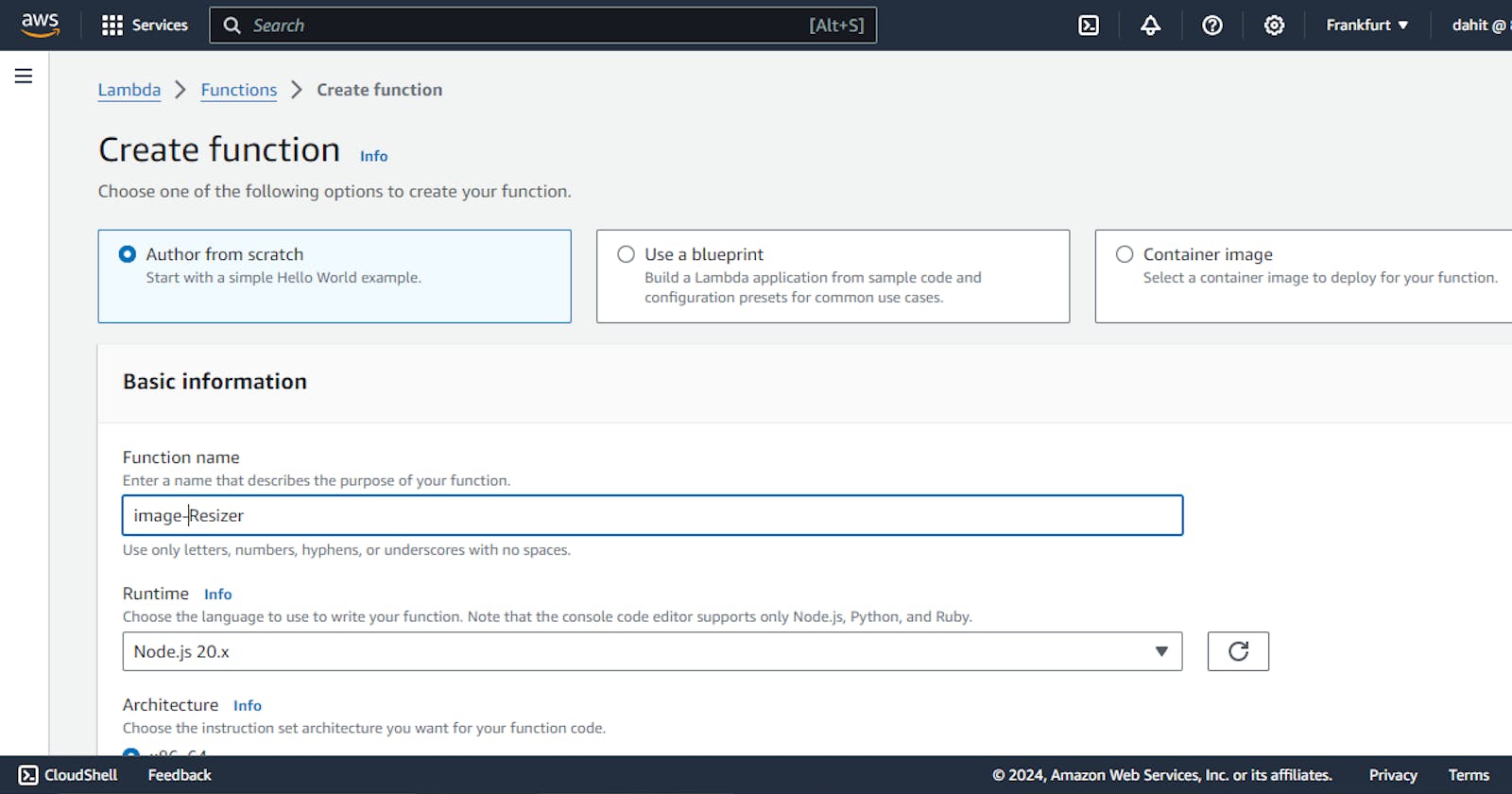
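Besides the permissions policy, the role carries a trust policy that says who may assume it. For a Lambda execution role, that principal is the Lambda service. Choosing Lambda as the trusted service in the IAM console should fill this in for you; the sketch below only shows the standard shape of that trust policy as a JavaScript object, for reference.

```javascript
// Trust policy allowing the Lambda service to assume the execution role.
const lambdaTrustPolicy = {
  Version: '2012-10-17',
  Statement: [
    {
      Effect: 'Allow',
      // Only the Lambda service can take on this role
      Principal: { Service: 'lambda.amazonaws.com' },
      Action: 'sts:AssumeRole',
    },
  ],
};

console.log(JSON.stringify(lambdaTrustPolicy, null, 2));
```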

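step 5. Create the Lambda function with a Node.js runtime and, under the execution role settings, choose the existing role resizerRole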

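step 6. Now, create the Node.js project and install the dependencies:

```bash
npm init
npm install aws-sdk jimp@0.22 --save
```

The code below uses the Jimp 0.x API (`jimp.read`, `resize(width, height)`, `getBufferAsync`), which later major versions of Jimp changed, hence the pin to 0.22. The AWS SDK for JavaScript v2 (`aws-sdk`) has to be bundled because the Node.js 18 and later Lambda runtimes include only SDK v3. Save the code as index.js, which matches the default handler setting `index.handler`: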

```javascript
const AWS = require('aws-sdk');
const jimp = require('jimp');

const s3 = new AWS.S3();

// Destination bucket name, read from the DEST_BKT_PATH environment variable
const DEST_BUCKET = process.env.DEST_BKT_PATH;

// Resize an image buffer to 200 x 200 pixels
async function imgResize(data) {
  const image = await jimp.read(Buffer.from(data));
  return image.resize(200, 200);
}

exports.handler = async (event) => {
  const myFileOps = event.Records.map(async (record) => {
    const bucket = record.s3.bucket.name;
    // S3 event keys are URL-encoded (a space arrives as '+'), so decode before use
    const filename = decodeURIComponent(record.s3.object.key.replace(/\+/g, ' '));

    // Download the original image from the origin bucket
    const inputData = await s3.getObject({ Bucket: bucket, Key: filename }).promise();

    const img = await imgResize(inputData.Body);
    // getBufferAsync returns a Promise, so the buffer is ready before the upload starts
    // (awaiting the callback-style getBuffer did not guarantee that)
    const resizedBuffer = await img.getBufferAsync(jimp.MIME_JPEG);

    // Upload the resized image to the destination bucket under the same key
    await s3.putObject({
      Bucket: DEST_BUCKET,
      Key: filename,
      Body: resizedBuffer,
      ContentType: 'image/jpeg',
    }).promise();
  });

  await Promise.all(myFileOps);

  console.log('done');
  return 'done';
};
```
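S3 event notifications deliver the object key URL-encoded, with spaces sent as `+`, so passing `record.s3.object.key` straight to `getObject` fails for a name like `holiday photo(1).jpg`. A minimal sketch of the decoding step as a hypothetical helper, `decodeS3Key`, which runs without AWS:

```javascript
// Object keys in S3 event notifications are URL-encoded, with spaces sent as '+'.
// Turn '+' back into spaces first, then decode percent-escapes such as %28 -> '('.
// A literal '+' in a key arrives as %2B, so it survives this order of operations.
function decodeS3Key(rawKey) {
  return decodeURIComponent(rawKey.replace(/\+/g, ' '));
}

console.log(decodeS3Key('sd.jpg'));                   // sd.jpg
console.log(decodeS3Key('holiday+photo%281%29.jpg')); // holiday photo(1).jpg
```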

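step 7. Zip index.js together with the node_modules folder into index.zip. Run the zip command from inside the project folder (for example, `zip -r index.zip index.js node_modules`) so that index.js sits at the root of the archive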
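Lambda loads the handler file from the root of the archive, so zipping the project folder itself (which puts index.js under a subfolder) is a common cause of "cannot find module" errors. A sketch of a pre-upload check, assuming you have the list of entries in the zip (for example from `unzip -l index.zip`); `checkZipLayout` is a hypothetical helper, and the required packages default to the two this code uses.

```javascript
// Hypothetical pre-upload check. Given the handler setting (e.g. "index.handler") and the
// paths inside the zip, confirm the handler file is at the zip root and that every
// package the code require()s was bundled under node_modules/.
function checkZipLayout(handler, zipEntries, requiredModules = ['aws-sdk', 'jimp']) {
  const problems = [];
  // "index.handler" -> file "index.js", exported function "handler"
  const moduleFile = handler.slice(0, handler.lastIndexOf('.')) + '.js';
  if (!zipEntries.includes(moduleFile)) {
    problems.push(`${moduleFile} is not at the root of the zip`);
  }
  for (const name of requiredModules) {
    if (!zipEntries.some((entry) => entry.startsWith(`node_modules/${name}/`))) {
      problems.push(`node_modules/${name} is missing`);
    }
  }
  return problems;
}

// Example entry list (abridged)
const entries = ['index.js', 'package.json', 'node_modules/aws-sdk/package.json', 'node_modules/jimp/package.json'];
console.log(checkZipLayout('index.handler', entries)); // []
```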

step 8. Now upload index.zip to the origin (source) bucket, along with sd.jpg for testing

step 9. Copy the object URL of index.zip
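Before pasting the URL into Lambda, it can help to confirm it points at the bucket and key you expect. A sketch that splits a virtual-hosted-style object URL (`https://<bucket>.s3.<region>.amazonaws.com/<key>`) into its parts; `parseS3ObjectUrl` is a hypothetical helper, path-style URLs are not handled, and the example assumes index.zip sits in `my-real-bucket-69` in `us-east-1`.

```javascript
// Hypothetical helper: split a virtual-hosted-style S3 object URL into bucket and key.
// Path-style URLs (https://s3.<region>.amazonaws.com/<bucket>/<key>) are rejected.
function parseS3ObjectUrl(objectUrl) {
  const url = new URL(objectUrl);
  const marker = url.hostname.indexOf('.s3.');
  if (url.protocol !== 'https:' || marker <= 0 || !url.hostname.endsWith('.amazonaws.com')) {
    throw new Error(`Not a virtual-hosted-style S3 URL: ${objectUrl}`);
  }
  return {
    bucket: url.hostname.slice(0, marker),
    // Drop the leading '/' and decode percent-escapes (assumes the key is percent-encoded)
    key: decodeURIComponent(url.pathname.slice(1)),
  };
}

console.log(parseS3ObjectUrl('https://my-real-bucket-69.s3.us-east-1.amazonaws.com/index.zip'));
// { bucket: 'my-real-bucket-69', key: 'index.zip' }
```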

step 10. In the Lambda function's Code tab, choose Upload from > Amazon S3 location and paste the URL

step 11. Add an S3 trigger on the origin bucket for object-created events
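The trigger is stored as a notification configuration on the origin bucket. The sketch below builds that configuration as a plain object in the shape of the S3 `PutBucketNotificationConfiguration` request; `buildTriggerConfig`, the rule `Id`, the placeholder function ARN, and the `.jpg` suffix filter are all assumptions. The suffix filter keeps uploads such as index.zip from invoking the function. Keep the trigger on the origin bucket only: if the function wrote into the bucket that triggers it, every output would start another invocation. (Adding the trigger in the Lambda console also grants S3 permission to invoke the function; configuring it through the API requires adding that permission separately.)

```javascript
// Hypothetical helper: notification configuration for the ORIGIN bucket that invokes
// the resize function whenever an object whose key ends in `suffix` is created.
function buildTriggerConfig(functionArn, suffix) {
  return {
    LambdaFunctionConfigurations: [
      {
        Id: 'resize-on-upload', // hypothetical rule name
        LambdaFunctionArn: functionArn,
        // Any object creation: Put, Post, Copy, or completed multipart upload
        Events: ['s3:ObjectCreated:*'],
        // Only keys ending in the suffix, so non-image uploads do not invoke the function
        Filter: { Key: { FilterRules: [{ Name: 'suffix', Value: suffix }] } },
      },
    ],
  };
}

// Placeholder account ID and function name
const exampleArn = 'arn:aws:lambda:us-east-1:123456789012:function:resizer';
console.log(JSON.stringify(buildTriggerConfig(exampleArn, '.jpg'), null, 2));
```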

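step 12. Also update the general configuration (Configuration > General configuration); for example, raise the timeout above the 3-second default, since downloading and resizing an image can take longer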
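Whatever values you choose must fall within Lambda's limits: a timeout of 1 to 900 seconds and memory of 128 to 10,240 MB (CPU is allocated in proportion to memory). A small sketch that checks a pair of values against those ranges; `checkGeneralConfig` and the sample values are hypothetical, not settings recommended by this tutorial.

```javascript
// Hypothetical helper: check timeout (seconds) and memory (MB) against Lambda's ranges.
function checkGeneralConfig({ timeoutSeconds, memoryMB }) {
  const errors = [];
  // Timeout: 1 to 900 seconds (15 minutes); the default is 3 seconds
  if (!Number.isInteger(timeoutSeconds) || timeoutSeconds < 1 || timeoutSeconds > 900) {
    errors.push('timeout must be a whole number of seconds between 1 and 900');
  }
  // Memory: 128 MB to 10,240 MB, in whole megabytes
  if (!Number.isInteger(memoryMB) || memoryMB < 128 || memoryMB > 10240) {
    errors.push('memory must be between 128 and 10240 MB');
  }
  return errors;
}

console.log(checkGeneralConfig({ timeoutSeconds: 30, memoryMB: 512 })); // []
```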

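step 13. Set up the environment variable DEST_BKT_PATH with the name of the destination bucket (my-thumbnail-image-69 in the policy above)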
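The handler reads the destination bucket from `process.env.DEST_BKT_PATH`; if the variable is missing or mistyped, the failure only shows up later, at the upload. Despite "PATH" in its name, the value is just the bucket name, with no `s3://` prefix or slashes. A sketch of a fail-fast check; `getDestBucket` is a hypothetical helper that would be called as `getDestBucket(process.env)` inside the function.

```javascript
// Hypothetical helper: read DEST_BKT_PATH and fail fast with a clear message.
function getDestBucket(env) {
  const bucket = (env.DEST_BKT_PATH || '').trim();
  if (!bucket) {
    throw new Error('DEST_BKT_PATH is not set; add it under Configuration > Environment variables');
  }
  // Bucket names never contain '/', so this catches values like "s3://bucket" or "bucket/"
  if (bucket.includes('/')) {
    throw new Error(`DEST_BKT_PATH should be a bare bucket name, got "${bucket}"`);
  }
  return bucket;
}

console.log(getDestBucket({ DEST_BKT_PATH: 'my-thumbnail-image-69' })); // my-thumbnail-image-69
```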

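step 14. Create a test event and use the following event JSON. Set the bucket `name` and `arn` to your origin bucket (the bucket granted `s3:GetObject` in the policy) and `key` to the test image uploaded in step 8: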

```json
{
  "Records": [
    {
      "eventVersion": "2.0",
      "eventSource": "aws:s3",
      "awsRegion": "us-east-1",
      "eventTime": "1970-01-01T00:00:00.000Z",
      "eventName": "ObjectCreated:Put",
      "userIdentity": {
        "principalId": "EXAMPLE"
      },
      "requestParameters": {
        "sourceIPAddress": "127.0.0.1"
      },
      "responseElements": {
        "x-amz-request-id": "EXAMPLE123456789",
        "x-amz-id-2": "EXAMPLE123/5678abcdefghijklambdaisawesome/mnopqrstuvwxyzABCDEFGH"
      },
      "s3": {
        "s3SchemaVersion": "1.0",
        "configurationId": "testConfigRule",
        "bucket": {
          "name": "my-origin-bucket-69",
          "ownerIdentity": {
            "principalId": "EXAMPLE"
          },
          "arn": "arn:aws:s3:::my-origin-bucket-69"
        },
        "object": {
          "key": "sd.jpg",
          "size": 1024,
          "eTag": "0123456789abcdef0123456789abcdef",
          "sequencer": "0A1B2C3D4E5F678901"
        }
      }
    }
  ]
}
```
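Since the test only succeeds when the event's bucket name matches the origin bucket in the policy, it can be easier to generate the event from a bucket and key. A sketch under that assumption; `makeS3PutEvent` is a hypothetical helper that keeps only the fields the handler reads plus a few from the sample event, and its key encoding only approximates what S3 sends (spaces become `+`).

```javascript
// Hypothetical helper: minimal S3 "ObjectCreated:Put" test event for a bucket and key.
function makeS3PutEvent(bucket, key) {
  return {
    Records: [
      {
        eventVersion: '2.0',
        eventSource: 'aws:s3',
        awsRegion: 'us-east-1',
        eventName: 'ObjectCreated:Put',
        s3: {
          s3SchemaVersion: '1.0',
          bucket: { name: bucket, arn: `arn:aws:s3:::${bucket}` },
          // Approximate S3's URL encoding of keys: percent-escapes, with spaces as '+'
          object: { key: encodeURIComponent(key).replace(/%20/g, '+') },
        },
      },
    ],
  };
}

// Paste the printed JSON into the Lambda test event editor
console.log(JSON.stringify(makeS3PutEvent('my-origin-bucket-69', 'sd.jpg'), null, 2));
```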

step 15. Now save and test the function

step 16. Open the destination S3 bucket

Finally, the resized 200 × 200 image appears there under the same key (sd.jpg).

Now, whenever you upload an image to the origin bucket, the resized image appears in the destination S3 bucket.

Thanks for reading!